Money movement vendors are terrible

TL;DR: This is just a rant.

I have been talking with a lot of vendors lately, and one thing is clear – they all underperform. Some disappoint more, some less, but the vast majority are so bad that I can't even talk about them.

At my current job I am looking for vendors that facilitate money movement, and not a single one provides all the capabilities I am interested in. Some vendors provide more flexibility, others provide more built-in compliance, and others are such a complete disaster that I am not sure how they even stay in business. For instance, people are still talking about embedding inline frames in 2025 – like, waat!?

For real, I am not asking for too much. This is what I need:

- Ready-made compliance (KYC/KYB, AML, BSA) for onboarding and transaction monitoring

- A variety of possible account ownership structures

- Comprehensive money movement capabilities – like traditional ACH or cards

- Pre-built, customizable UI components for onboarding – with customer scenarios mapped out. Also, clear guides and documentation!

- Comprehensive webhooks and reconciliation reporting – some vendors in the market do not even have this!

- Sandbox environments for thorough API testing – I don't even want a portal, just give me an email with the secrets

- Scalability to support future product offerings without additional integrations – super important as changing money movement partners is a drag

- Fast time to market!!!!!!! I don't want to spend ninety days talking to a bank about my compliance program – can you take that risk for me? 🙏

Am I asking for too much!?

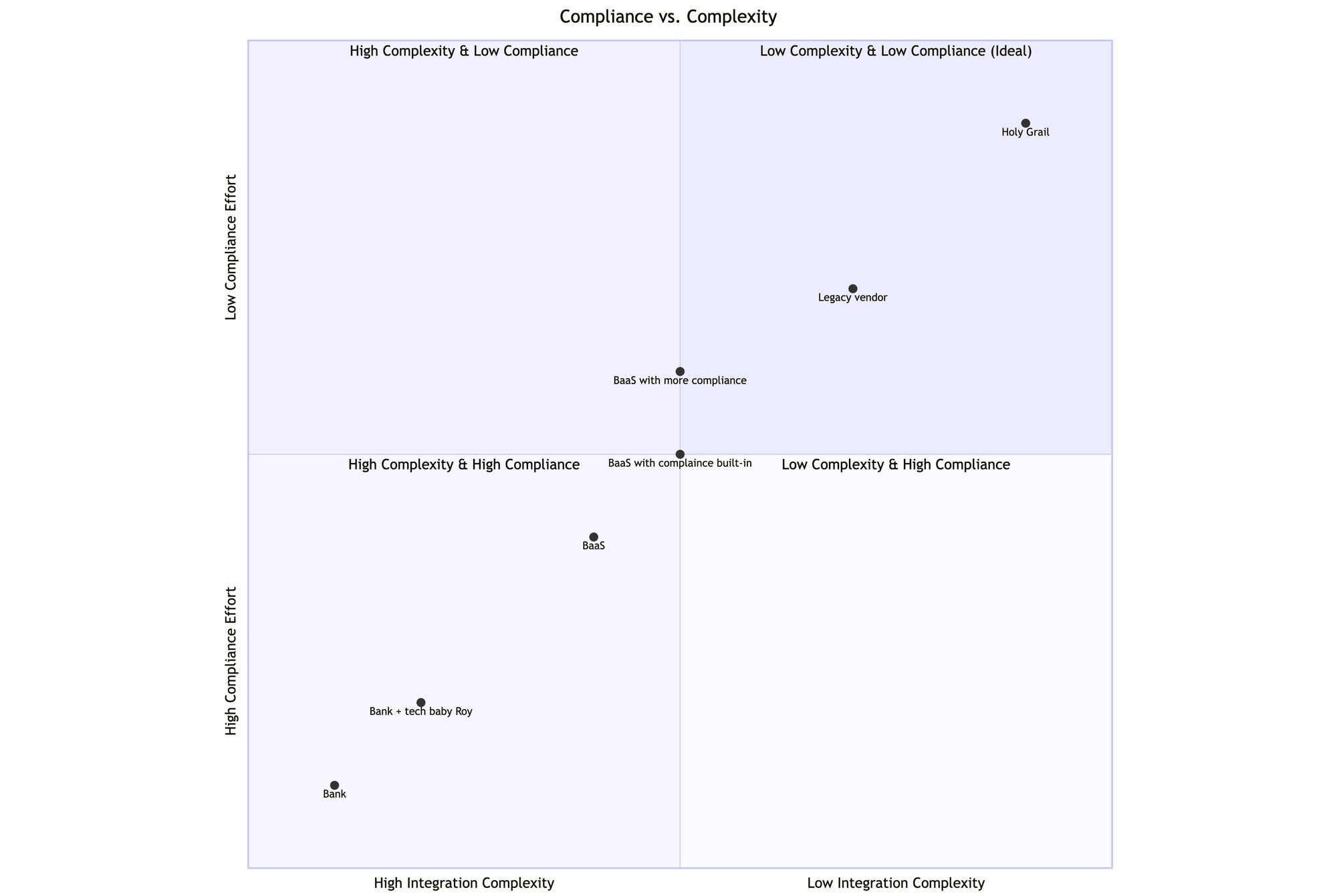

Clearly I am not, and you could pretty much place every vendor on a quadrant chart of compliance vs. complexity.

Ok, I might be asking for a lot of things.

Hopefully I get to solve this problem or someone does it before me. Anyway, thanks for tagging along!